PixVerse-R1: Next-Generation Real-Time World Model

Abstract

We present PixVerse-R1, a next-generation real-time world model architected upon a native multimodal foundation model. This system enables real-time video generation where visual content responds instantly and fluidly to user input. By overcoming the intrinsic latency and fixed-length constraints of traditional video workflows, PixVerse-R1 transforms video generation into an infinite, continuous, and interactive visual stream. This represents a significant evolution in the creation, experience, and sharing of audiovisual media, marking a paradigm shift toward intelligent, interactive media capable of instantaneous adaptation based on user intent.

1. Introduction

The digital media landscape is shifting fundamentally from static, pre-rendered content toward dynamic, interactive experiences. Conventional production pipelines have historically been constrained by high latency and fixed-length clips, creating a dichotomy between content creation and real-time consumption.

To address these limitations, we introduce a novel world model architecture that unifies a native multimodal foundation model, a consistency-preserving autoregressive mechanism, and an instantaneous response engine. This unified approach allows for the joint processing of spatiotemporal patches alongside text and audio data, effectively dismantling traditional media processing silos. Because the system streams indefinitely through the autoregressive mechanism and the instantaneous response engine, the generated world remains physically consistent over long horizons with low computational overhead.

Key Capability: Leveraging this architecture, our system generates high-resolution video, up to 1080P, in real time. This capability enhances visual fidelity and enables AI-native gaming and interactive cinema, where environments and narratives evolve dynamically in response to user interaction. Broadly, this allows generative systems to function as persistent, interactive worlds rather than finite media artifacts, indicating a trajectory toward continuous, stateful, and interactive audiovisual simulations.

2. Technical Architecture

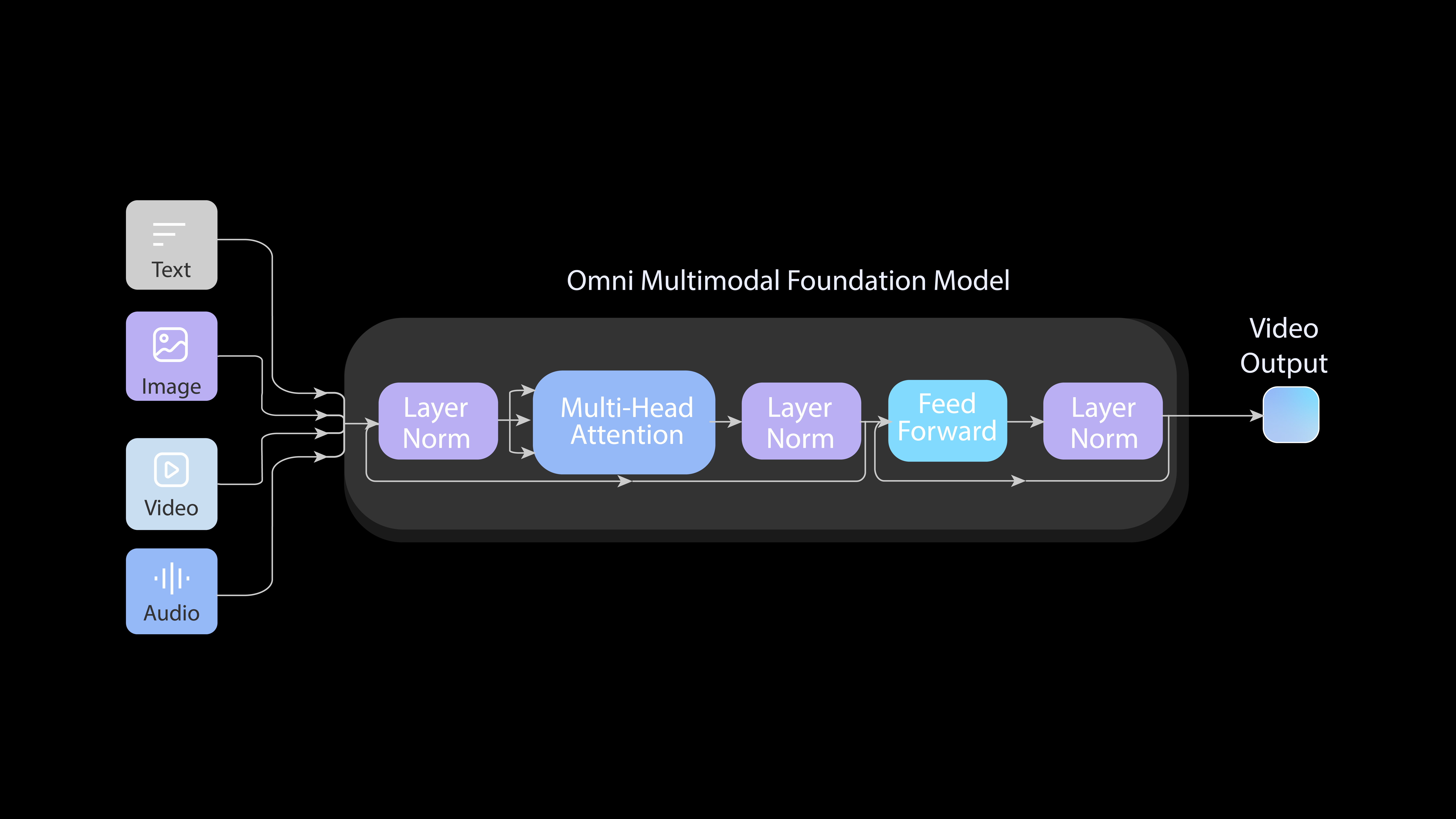

2.1 Omni: Native Multimodal Foundation Model

To attain general capabilities, we moved beyond traditional generation pipelines and designed a fully end-to-end Native Multimodal Foundation Model.

- Unified Representation: The Omni-model unifies diverse modalities (text, image, video, audio) into a continuous stream of tokens, allowing it to accept arbitrary multimodal inputs within a single framework.

- End-to-End Training: The entire architecture is trained across heterogeneous tasks without intermediate interfaces, preventing error propagation and ensuring robust scalability.

- Native Resolution: We utilize native resolution training within this framework to avoid artifacts typically associated with cropping or resizing.
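The unified representation described above can be illustrated with a minimal sketch. The report does not specify how tokens are formed, so everything here is an assumption for illustration: the patch sizes `pt`, `ph`, `pw`, the embedding width `d_model`, and the random projection matrix (a trained model would learn it) are all hypothetical. The sketch only shows the general idea of cutting a video into spatiotemporal patches and placing them in the same sequence as text embeddings.

```python
import numpy as np

def patchify_video(video, pt=2, ph=4, pw=4):
    """Split a (T, H, W, C) video into flattened spatiotemporal patches.

    Returns an array of shape (num_patches, pt * ph * pw * C).
    Patch sizes are illustrative assumptions, not values from the report.
    """
    T, H, W, C = video.shape
    assert T % pt == 0 and H % ph == 0 and W % pw == 0, "dims must divide evenly"
    # Split each axis into (block index, offset within block),
    # then move the three block indices to the front.
    x = video.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * C)

def build_token_stream(text_emb, video, d_model=16, seed=0):
    """Project video patches to d_model and append them to the text embeddings."""
    rng = np.random.default_rng(seed)
    patches = patchify_video(video)
    # Hypothetical linear projection standing in for a learned embedding layer.
    w_proj = rng.normal(size=(patches.shape[1], d_model))
    video_tokens = patches @ w_proj
    return np.concatenate([text_emb, video_tokens], axis=0)

text_emb = np.zeros((5, 16))    # 5 placeholder text tokens
video = np.zeros((4, 8, 8, 3))  # 4 frames of 8x8 RGB
tokens = build_token_stream(text_emb, video)
print(tokens.shape)             # (13, 16)
```

Because the patch grid is derived from the input shape rather than a fixed canvas, the same function accepts any resolution whose dimensions divide by the patch size, which is one simple way to avoid cropping or resizing the input.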

Furthermore, the model internalizes the intrinsic physical laws and dynamics of the real world by learning from a massive corpus of real-world video data. This foundational understanding empowers the system to synthesize a consistent, responsive “parallel world” in real time.

The Omni-model scales effectively, functioning not merely as a generative engine, but as a pioneering step towards building general-purpose simulators of the physical world. By treating the simulation task as a singular, end-to-end generation paradigm, we facilitate the exploration of real-time, long-horizon AI-generated worlds.

Figure 1. The end-to-end architecture of our Omni Native Multimodal Foundation Model. The unified design enables the Omni-model to accept arbitrary multimodal inputs and generate audio and video simultaneously.

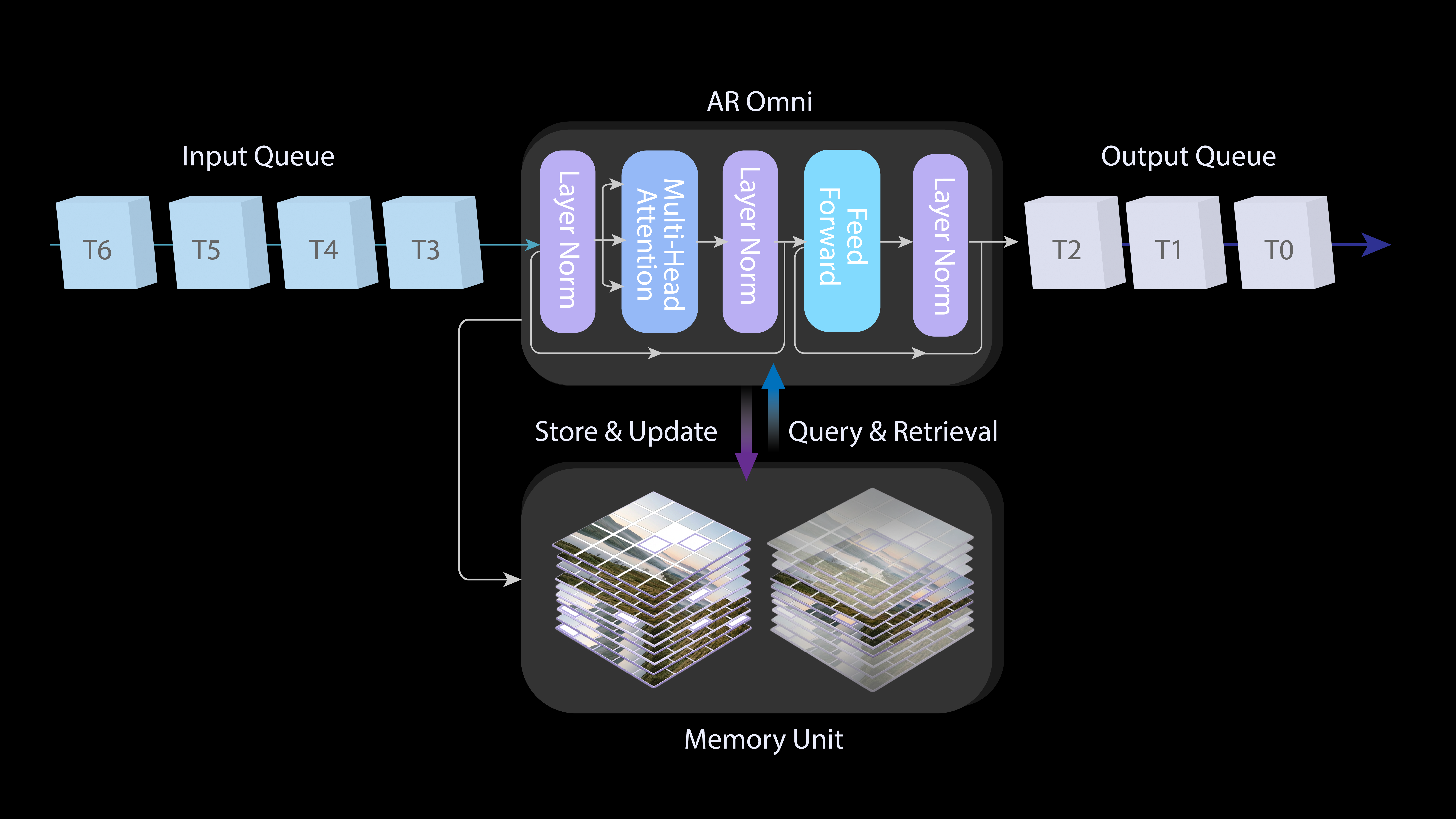

2.2 Memory: Consistent Infinite Streaming via Autoregressive Mechanism

Unlike standard diffusion methods restricted to finite clips, PixVerse-R1 integrates autoregressive modeling to enable infinite, continuous visual streaming, and incorporates a memory-augmented attention mechanism to ensure the generated world remains physically consistent over long horizons.

- Infinite Streaming: By formulating video synthesis as an autoregressive process, the model sequentially predicts subsequent frames to achieve continuous, unbounded visual streaming.

- Temporal Consistency: A memory-augmented attention mechanism conditions the generation of the current frame on the latent representations of the preceding context, ensuring the world remains physically consistent over long horizons.
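The two properties above can be sketched as a generator loop. This is a toy illustration, not the report's method: the bounded sliding-window memory, the scalar "latents", and the `toy_predictor` stand-in are all assumptions made for the example. The point is only the control flow, in which each new output is conditioned on remembered context plus the latest user input, and the stream has no fixed length.

```python
from collections import deque
from itertools import islice

def stream_frames(predict_next, first_latent, controls, memory_size=4):
    """Yield latents one at a time, conditioning each on a bounded memory."""
    # deque with maxlen drops the oldest entry once the memory is full.
    memory = deque([first_latent], maxlen=memory_size)
    for control in controls:
        latent = predict_next(list(memory), control)
        memory.append(latent)
        yield latent

def toy_predictor(memory, control):
    """Stand-in for the model: average the remembered latents, add the control."""
    return sum(memory) / len(memory) + control

def constant_controls(value):
    """Endless user-input stream; a real system would read live events."""
    while True:
        yield value

frames = stream_frames(toy_predictor, 0.0, constant_controls(1.0), memory_size=2)
print(list(islice(frames, 4)))  # [1.0, 1.5, 2.25, 2.875]
```

The generator never terminates on its own; the consumer decides how many frames to pull, which mirrors the unbounded streaming behaviour described in the list.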

Figure 2. Autoregressive modeling integrated with the Omni foundation model.

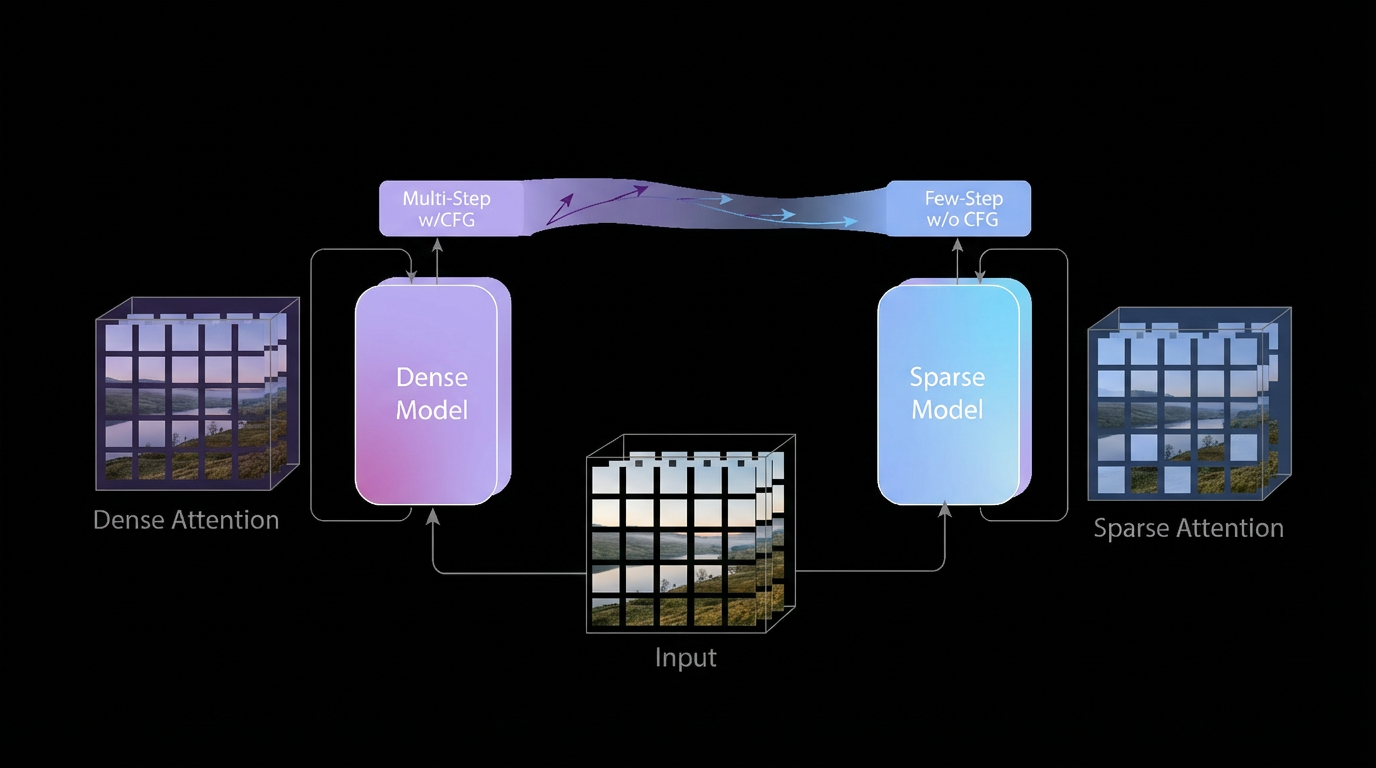

2.3 Real-time 1080P: Instantaneous Response Engine

While iterative denoising typically yields high quality, its computational cost often prevents real-time performance. To resolve this and achieve real-time generation at high resolutions (up to 1080P), we re-architected the pipeline into an Instantaneous Response Engine (IRE).

The IRE optimizes the sampling process through the following advancements:

- Temporal Trajectory Folding: By implementing Direct Transport Mapping as a structural prior, the network predicts the clean data distribution directly. This reduces sampling steps from dozens to merely 1–4, creating a streamlined pathway essential for ultra-low latency.

- Guidance Rectification: We bypass the sampling overhead of Classifier-Free Guidance by merging conditional gradients into the student model, so guidance no longer requires a separate unconditional pass at inference time.

- Adaptive Sparse Attention: This reduces redundant long-range dependencies, yielding a more compact computational graph that further supports real-time 1080P generation.
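To make the savings from the first two items concrete, the sketch below counts network evaluations. It is an illustration under stated assumptions, not the IRE itself: `CountingModel` is a toy denoiser, the update rule is a placeholder, and the figures of 50 baseline steps and a guidance scale of 3.0 are hypothetical (the report says only "dozens" of steps reduced to 1–4). The guidance combination used is the standard Classifier-Free Guidance formula, which needs one conditional and one unconditional evaluation per step.

```python
class CountingModel:
    """Toy denoiser that records how many times it is evaluated."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, cond):
        self.calls += 1
        # Placeholder prediction: offset of x from the condition (0 if unconditional).
        target = 0.0 if cond is None else cond
        return x - target

def sample_with_cfg(model, x, cond, steps, guidance_scale):
    """Multi-step sampling with classifier-free guidance: two evaluations per step."""
    for _ in range(steps):
        eps_uncond = model(x, None)
        eps_cond = model(x, cond)
        # Standard CFG combination of the two predictions.
        eps = eps_uncond + guidance_scale * (eps_cond - eps_uncond)
        x = x - eps / steps  # placeholder update rule
    return x

def sample_distilled(student, x, cond, steps):
    """Few-step sampling with guidance folded into the student: one evaluation per step."""
    for _ in range(steps):
        x = x - student(x, cond) / steps  # placeholder update rule
    return x

teacher, student = CountingModel(), CountingModel()
sample_with_cfg(teacher, x=1.0, cond=0.5, steps=50, guidance_scale=3.0)
sample_distilled(student, x=1.0, cond=0.5, steps=4)
print(teacher.calls, student.calls)  # 100 4
```

Under these assumed numbers the per-frame cost drops from 100 network evaluations to 4, before any additional savings from sparse attention inside each evaluation.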

Figure 3. The instantaneous response engine consists of three modules: temporal trajectory folding, guidance rectification and adaptive sparse attention learning.

3. Applications and Social Impact

PixVerse-R1 introduces a new generative medium: real-time, continuous, and stateful audiovisual systems. Unlike pre-rendered video, this medium operates as a persistent process that responds instantly to user intent, where generation and interaction are tightly coupled. This new medium enables a broad class of interactive systems, including but not limited to:

- Interactive Media
  - AI-native games and interactive cinematic experiences
  - Real-time VR/XR and immersive simulations

- Creative and Educational Systems
  - Adaptive media art and interactive installations
  - Real-time learning and training environments

- Simulation and Planning
  - Experimental research and scenario exploration
  - Industrial, agricultural, and ecological simulations

Beyond specific applications, PixVerse-R1 functions as a continuous audiovisual world simulator, reducing the distance between human intent and system response, and enabling new forms of human–AI co-creation within persistent digital environments.

4. Conclusion

PixVerse-R1 introduces a real-time generative framework that overcomes the inherent limitations of traditional video workflows through architectural innovations in multimodal processing and instantaneous response. By enabling consistent, real-time generation, this model marks a significant evolution in the creation and experience of audiovisual media. Real-time latency enables a transition from static content consumption to dynamic environment interaction, providing a scalable computational substrate for applications ranging from AI-native gaming to complex industrial simulations. By bridging the gap between user intent and instantaneous visual feedback, the system establishes a new frontier for interactive world modeling and human–AI collaborative environments.

5. Limitations

While PixVerse-R1 offers significant modeling advantages, two primary constraints persist regarding temporal accuracy and physical fidelity:

- Temporal Error Accumulation: Over extended sequences, minor prediction errors may accumulate, potentially compromising the structural integrity of the simulation.

- Physics vs. Computation Trade-off: To achieve real-time generation, we reduced generation complexity. Consequently, some physical laws may be rendered less precisely than in non-real-time models.
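
The temporal error accumulation described in the first item can be made concrete with a standard worst-case bound. This is an illustrative sketch under assumptions that are not in the report: $\delta_t$ denotes the deviation of the generated state from an ideal rollout at step $t$, each step contributes a new error of norm at most $\varepsilon$, and the one-step predictor is $L$-Lipschitz in its input state.

```latex
\delta_0 = 0, \qquad \delta_{t+1} \le L\,\delta_t + \varepsilon
\quad\Longrightarrow\quad
\delta_T \le \varepsilon \sum_{k=0}^{T-1} L^{k} =
\begin{cases}
T\,\varepsilon, & L = 1,\\[2pt]
\varepsilon\,\dfrac{L^{T}-1}{L-1}, & L \neq 1.
\end{cases}
```

Under these assumptions the drift grows at most linearly in $T$ when $L = 1$ and at most geometrically when $L > 1$, while for $L < 1$ it stays below $\varepsilon / (1 - L)$ for every $T$.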